Mohamed Shawky Sabae

I am a PhD student in the Computer Graphics research group at the Eberhard Karls University of Tübingen, supervised by Prof. Dr.-Ing. Hendrik P. A. Lensch. I am also a scholar at the International Max Planck Research School for Intelligent Systems (IMPRS-IS).

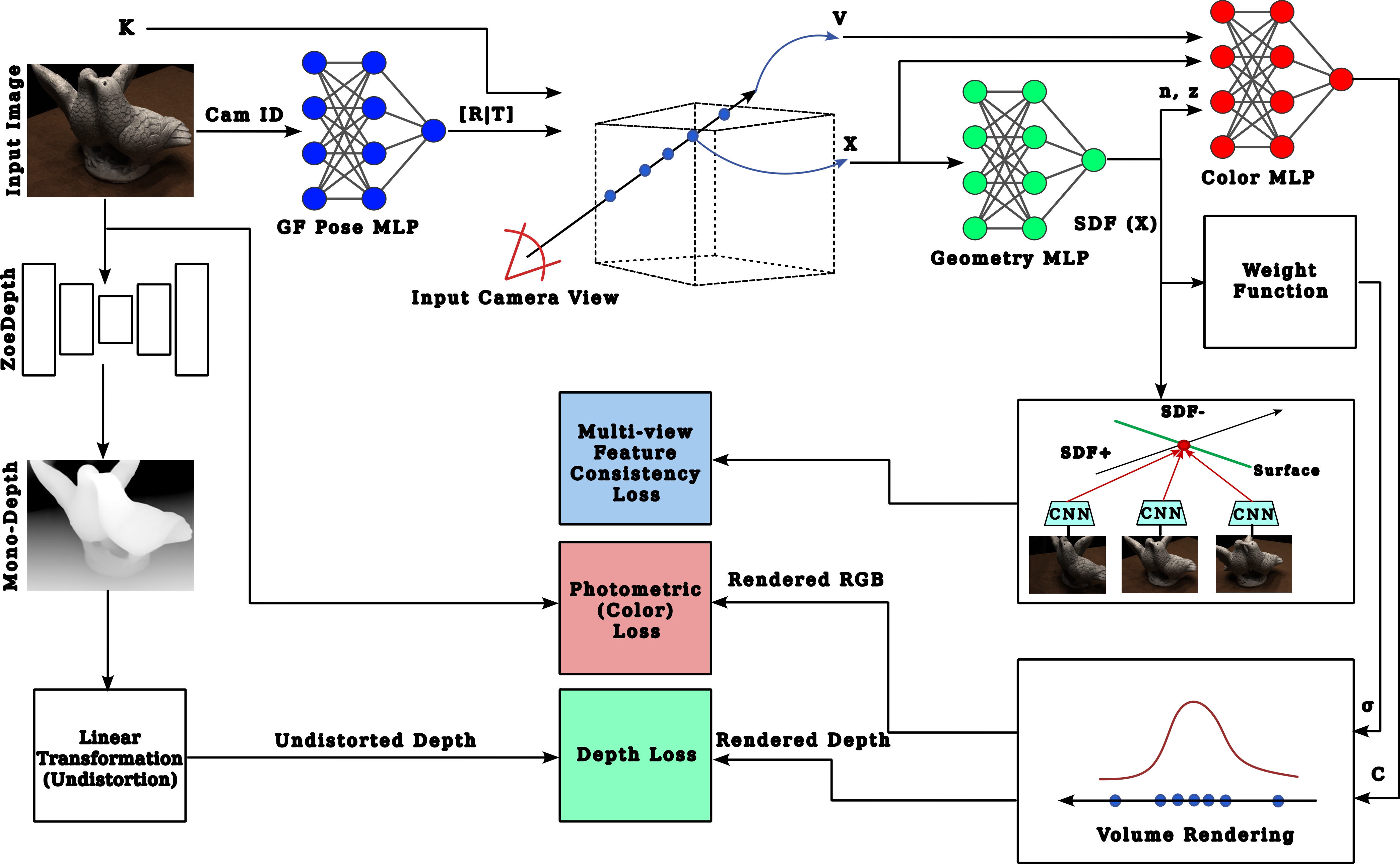

Research Focus: Inverse Rendering, Differentiable Rendering, 3D/4D Scene Reconstruction.